|

Archive: January 2023

|

Happy New Year's! Woo hoo, we made it out of 2022 and on into 2023!

It rained super hard last night, and just before midnight I captured this photo of the magnificent old oak tree in our backyard. It suddenly struck me - this tree is not only older than the whole neighborhood surrounding it, not only older than me, but older than anyone alive, much much older; in fact older than the United States, older than the European settlement of California. Older than almost all of what we consider "history". And yet there it stands, in the rain, hanging out, waiting patiently for the next trip around the sun, and the next, and the next. Supercool.

So ... what did you do last night? We had a nice dinner - earlyish - then came home and hung out 'til midnight; not too exciting, but nice. It was great that New Year's Eve in Times Square featured a band I actually knew and liked (Duran Duran).

Today there was a lot of football to watch. I used to be a bigger fan; now I'm mostly just a College Bowls and Pro Playoffs watcher. Tomorrow, no bowls (yeah, it's Sunday, and while the tradition of moving the New Year's Day bowls to Monday when New Year's falls on a Sunday began for religious reasons, now it's so that college and pro games don't overlap), and then Monday, the Rose Parade and the New Year's stuff. Lots of watching and eating coming up.

Also great - Shirley made Oliebollen, a kind of Dutch donut traditionally made on New Year's Eve - yes they are as good as they look, and no calories of course.

And so we move on into 2023 ... what will it bring? Stay tuned!

|

|

Checking in ... welcome to blogging in 2023.

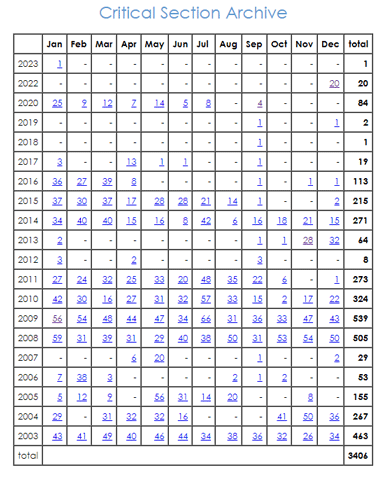

I began blogging on Jan 1, 2003, so it's now been 20 years! Wow. Not continuously - as a quick peek at the archive will reveal, there have been gaps - but definitely more on than off. During that time I've made 3,406 posts containing 10,771 images. There are 24,965 links, of which only 912 are back to other content on this blog. (It would be interesting to determine how many of those links have died ... should be possible to do ... made slightly harder by the fact that some sites don't return a 404 when content is missing.)
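Counting dead links is scriptable. Here is a minimal sketch using only Python's standard library; `check_link`, `classify`, and the phrase list in `SOFT_404_HINTS` are hypothetical names, and the phrase match is just a crude heuristic for "soft 404s" (pages that return 200 OK but say the content is gone), so such pages get flagged as suspect rather than dead.

```python
import re
import urllib.error
import urllib.request

# Hypothetical phrases that often appear on "soft 404" pages, which return
# 200 OK even though the content is gone. A crude heuristic, not a standard.
SOFT_404_HINTS = re.compile(
    r"page not found|no longer available|does not exist|404 error",
    re.IGNORECASE,
)


def classify(status, body):
    """Classify a fetch result as 'dead', 'suspect', or 'alive'."""
    if status is None or status >= 400:
        return "dead"  # unreachable host, timeout, or a real HTTP error
    if SOFT_404_HINTS.search(body):
        return "suspect"  # 2xx response whose text looks like an error page
    return "alive"


def check_link(url, timeout=10.0):
    """Fetch url (urlopen follows redirects) and classify the result."""
    request = urllib.request.Request(
        url, headers={"User-Agent": "link-checker-sketch/0.1"}
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            # The first 64 KB is plenty to spot an error page.
            body = response.read(65536).decode("utf-8", errors="replace")
            return classify(response.status, body)
    except urllib.error.HTTPError as err:
        return classify(err.code, "")
    except OSError:
        # URLError, timeouts, and DNS failures are all OSError subclasses.
        return classify(None, "")
```

Looping `check_link` over the 24,965 outbound links (politely, with a delay between requests) would give a first estimate; the "suspect" pile still needs a human look.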

At various times I've paid more or less attention to traffic; for some time now I have used Google Analytics, which is pretty good. It tells me I currently get about 500 unique visitors a day, who conduct 600 sessions averaging 1:32 each. So be it. This doesn't measure RSS, and based on the survey I just ran, I actually think I get way more inbound traffic from there than from search engines. I also get traffic from Twitter, where I post a link back to every new item; no stats on that, but I should dig deeper.

Hitting a new year means I have to roll over all those "this date in" links at the top of the sidebar - who knew I would someday have 20 of them! - and revisiting old stuff remains one of the most fun things about blogging. My flight feature gets a lot of use, at least from me (show me what I posted this day every year).
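The "this date in" idea boils down to filtering posts by month and day across all years. A minimal sketch, assuming posts are simple (date, title) pairs; `Post`, `flight`, and the sample posts are hypothetical, not this blog's actual code or content:

```python
from datetime import date
from typing import NamedTuple


class Post(NamedTuple):
    posted: date
    title: str


def flight(posts, month, day):
    """Return posts made on month/day in any year, newest year first."""
    same_day = [p for p in posts if (p.posted.month, p.posted.day) == (month, day)]
    return sorted(same_day, key=lambda p: p.posted, reverse=True)


# Made-up sample data for illustration.
posts = [
    Post(date(2003, 1, 1), "sample post 1"),
    Post(date(2013, 1, 1), "sample post 2"),
    Post(date(2013, 1, 2), "sample post 3"),
    Post(date(2023, 1, 1), "sample post 4"),
]
for p in flight(posts, 1, 1):
    print(p.posted.year, p.title)
```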

It's a little fun to remember all the stuff I used to do: frames (yikes), blogrolls (we hardly knew ya - but OPML lives on), blog roulette (pick two blogs from the blogroll and feature them), and lots of under-the-covers performance optimization from when I hosted this blog myself (it lives at AWS now). Just recently I got rid of another complication - I used to serve a skinnied down version of each page to robots (now they get what everyone gets).
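Blog roulette is easy to reconstruct from an OPML blogroll. A minimal sketch using Python's standard library, assuming blogroll entries are `<outline>` elements carrying `text` and `xmlUrl` attributes (the usual convention for feed subscription lists); `blog_roulette` and the sample blogroll are hypothetical:

```python
import random
import xml.etree.ElementTree as ET

SAMPLE_OPML = """<?xml version="1.0"?>
<opml version="2.0">
  <head><title>Sample blogroll</title></head>
  <body>
    <outline text="Blog A" type="rss" xmlUrl="https://a.example/rss"/>
    <outline text="Blog B" type="rss" xmlUrl="https://b.example/rss"/>
    <outline text="Blog C" type="rss" xmlUrl="https://c.example/rss"/>
  </body>
</opml>"""


def blog_roulette(opml_text, k=2, rng=random):
    """Pick k distinct feeds at random from an OPML blogroll."""
    root = ET.fromstring(opml_text)
    # iter() also finds outlines nested inside folder outlines.
    feeds = [
        (o.get("text"), o.get("xmlUrl"))
        for o in root.iter("outline")
        if o.get("xmlUrl")
    ]
    return rng.sample(feeds, k)


print(blog_roulette(SAMPLE_OPML))
```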

And so onward in 2023 ... let's see what happens.

(Anyone care to guess whether I'll be blogging in 2043? Stay tuned!)

|

|

It's the first filter pass of the new year ... lots going on. Many (many!) year-in-review articles out there, and many (many!) what's-going-to-happen-next articles and posts, too. The big trends I see are 1) crypto is over, the pyramid is finally collapsing, and 2) tech investment has retrenched, we seem back to a more normal situation where good companies can raise money but bad companies (and non-companies) cannot.

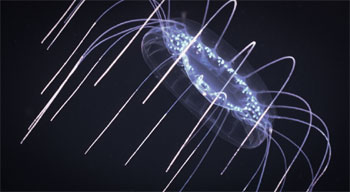

Mashable: The deep sea discoveries and sightings of 2022. "You're always finding things you haven't seen before."

The Federalist: 10 wins in 2022. Many more losses, though...

Nonzero News: Tweet of the year. Sadly, a good call.

Ars Technica: 10 best cars we drove in 2022. Eight of the ten are EVs, zero of them are Teslas, #1 was a Kia. Who would have thought?

A recurring theme in 2022 has been the governments' reactions to Covid. David Sacks retweets Elon Musk regarding Anthony Fauci. To me it seems likely that the Wuhan flu virus was synthetic, and accidentally escaped the lab where it was created for "gain of function" research. Sounds like a movie, and it stopped the whole world for a while.

Here's an interesting Tweet from Joscha Bach. I'm a huge fan of Wikipedia and never would have known this. Did you?

Taxprof Blog: The Myth of American Income Inequality. "Real income of the bottom quintile, the authors write, grew more than 681% from 1967 to 2017. The percentage of people living in poverty fell from 32% in 1947 to 15% in 1967 to only 1.1% in 2017." Economic growth has been a rising tide; all boats aren't lifted equally, but all boats *are* lifted. Definitely conflicts with the prevailing narrative, huh?
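For scale, and assuming "grew more than 681%" means real income ended at about 7.81 times its 1967 level (an increase *by* 681%), that works out over the 50 years to roughly 4.2% compound growth per year:

$$
(1 + 6.81)^{1/50} - 1 = 7.81^{0.02} - 1 \approx 0.042
$$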

2022 was the year of many things; among them, SpaceX launches became commonplace, as did their successful booster landings and subsequent reuse. Teslarati notes their 61st launch of 2022 tied a 42-year-old record set by the USSR.

Ottmar Liebert: 2022. I link because he links the year in cheer, 183 ways the world got better, and New Atlas' best photos of 2022.

Panda's Thumb: Breakthroughs of 2022. "I consider a miracle to be something that I understand in some detail and know it is impossible, yet there it is in my hand for $200 – a digital camera, for example." He links Science: Breakthrough of the year ... the James Webb telescope.

BusinessWeek: How not to play the game. Yeah, the FTX collapse, etc., but there's a bigger picture, as this article points out. "What makes this problem so hard in a crypto financial system is that there are no economic fundamentals." I'm not sure there's an actual problem, there's just no there there.

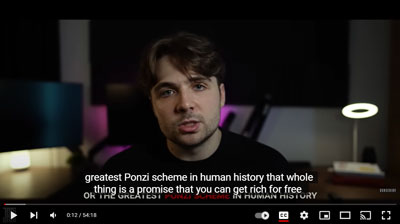

Liron Shapira: So long crypto, thanks for the memories. This Tweet has been viewed 456K times. You should view it too; click through and watch the video. It's dead on.

Miguel de Icaza: the truly amazing part about crypto is how they scaled scamming. "340,000 people in this last round trusted their money to good looking web sites."

As a known "techie" my friends often ask me about crypto, and I've always said I don't get it. By which I mean, I know how blockchains work, but I don't understand how ICOs and NFTs and DAOs create value. And I still don't.

Brad Feld: What just happened. "If you're a fan of Harry Potter, think of 2022 as the sorting ceremony." He thinks 2023 will be like 2000. For crypto, it already is.

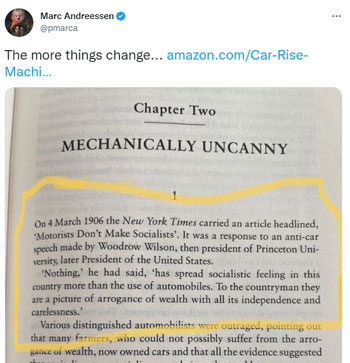

Finally, from Marc Andreessen: The more things change...

Definitely worth keeping in mind as we advance into the new year. Some things like crypto are passing fads, others, like AI, are new things which genuinely create new value.

And RealClearPolitics notes: Chase the American Dream in 2023. "People across the United States share a positive and hopeful narrative about the American Dream. In 2022, most Americans said they either have achieved or are on their way to achieving the American Dream. Only a small minority, 18%, said it is out of reach. Notably, this trend was consistent across age groups, race, education, and income."

|

|

Rain! Yep, lots of rain. Lots of storms. Lots of weather. Yikes...

Reading my flight of posts from years past, I guess yeah, we do get winter in California, and yeah, it does often rain right after New Year's. The pic at right is downtown LA, post storm. Beautiful, huh? You often see those mountains and don't think of them, but you don't often see them covered with snow.

I was supposed to go sailing tomorrow - first race of the season, the Hot Rum - but Santa Barbara harbor is silted closed and the yacht club itself is surrounded by water. It sits on stilts on the beach, and has done so for a long time, so I think the building will be okay, but the parking lot, beach, boatyard, etc. will need some recovery time. Wow.

|

|

So it's crummy weather and being a candy-ass Californian (instead of a hardy Dutchman!) I have resorted to Zwifting instead of riding outside.

The pic at left shows my setup; a Wahoo Kickr "smart trainer" that holds my bike, measures my power output, and simulates riding conditions like climbing hills and wind, a cadence sensor that goes in my sock, and a heart rate monitor. I can use my Renovo road bike "as is" in this setup, easy in, easy out when I want to do a "real" ride outside.
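For the curious, here is roughly what "simulating a hill" means. This is a minimal sketch of the textbook road-cycling power model (gravity, rolling resistance, aerodynamic drag) with made-up default values for rider mass, rolling resistance, and drag area. It is not Wahoo's or Zwift's actual algorithm, just the physics a trainer has to approximate when it adds resistance on a climb or into a headwind.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def required_power(speed_ms, grade=0.0, headwind_ms=0.0, mass_kg=85.0,
                   crr=0.004, cda_m2=0.32, air_density=1.225):
    """Watts needed to hold speed_ms on a given grade (0.05 = 5%).

    Defaults are illustrative assumptions: rider plus bike mass, rolling
    resistance coefficient, and drag area (CdA) for a road position.
    Drivetrain losses are ignored.
    """
    theta = math.atan(grade)  # convert grade (rise/run) to an angle
    gravity = mass_kg * G * math.sin(theta)
    rolling = crr * mass_kg * G * math.cos(theta)
    air_speed = speed_ms + headwind_ms
    # abs() keeps the sign right if a tailwind is faster than the rider.
    drag = 0.5 * air_density * cda_m2 * air_speed * abs(air_speed)
    return (gravity + rolling + drag) * speed_ms


flat = required_power(30 / 3.6)  # 30 km/h on the flat
climb = required_power(15 / 3.6, grade=0.06)  # 15 km/h up a 6% grade
print(f"flat: {flat:.0f} W, climb: {climb:.0f} W")
```

The point of the comparison: at half the speed, the 6% climb still takes more power, because lifting body weight dominates once the road tilts up.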

Zwift has a bunch of riding terrain on their made-up island of Watopia, but they have also recently digitized some real places to ride, like France, Italy, and Innsbruck, Austria. The overall experience isn't exactly like riding outside, but it's not bad. Especially when exactly like outside would mean riding in a storm. And the best part about Zwift is there are other people - just like me - out there riding, and we're all together. Kind of fun to pass people from all over the world (well, and also getting passed by them :)

Zwift nerd note - if you look closely at this picture, you can see I'm wearing a KOM jersey. Don't ask!

|

|

Making a filter pass on a rather soggy day ... we'll see how it goes.

I recently discovered Dutch Bros coffee! How did I not know about them?

SpaceX (and Elon Musk) celebrated their 61st launch of 2022, and their 61st successful one too. Wow, just wow. Remember when they were working on launch 1?

Putting in a marker here ... over time, SpaceX might well become one of the largest and most successful companies of all time.

Not everyone is excited about space; Maciej writes Why Not Mars, in which he attempts to answer that question. (via John Gruber) And to be clear, his objection is to manned travel, which is admittedly not a bad point. But if we abandon manned space travel, what's next for us? (And how will I ever get to Titan?)

An interesting reminder: our first image of Mars was a paint-by-numbers pastel drawing. My father worked at JPL at the time of Mariner 4, I remember it well!

I've been working on a cool project which involves using NVIDIA's CUDA library to accelerate processing by offloading parallel tasks to a GPU. (More on this to come...) GPUs are being used for this all over the place, especially in connection with AI tasks; computing a neural network is a perfect parallel operation. (Essentially what our brains are doing all the time.) But despite this, Slashdot reports desktop GPU sales hit a 20-year low.
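Why a neural network maps so well onto a GPU: each output of a layer depends only on that layer's inputs, never on its neighbors, so every output can be computed at the same time. Here is a minimal Python sketch of one dense layer with a ReLU activation; the names are hypothetical, and the thread pool only demonstrates that the work splits cleanly into independent pieces. (Python threads won't actually speed up this arithmetic; running thousands of such pieces at once is the job CUDA hands to the GPU.)

```python
from concurrent.futures import ThreadPoolExecutor


def neuron(weights, bias, inputs):
    """One output: weighted sum of the inputs plus bias, then ReLU."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, z)


def dense_layer_serial(W, b, x):
    """Compute each neuron's output one after another."""
    return [neuron(w, bi, x) for w, bi in zip(W, b)]


def dense_layer_parallel(W, b, x, workers=4):
    """Same result, but every neuron is an independent task."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, so outputs line up.
        return list(pool.map(lambda wb: neuron(wb[0], wb[1], x), zip(W, b)))


W = [[1, 2], [-1, -1], [0.5, 0.5]]  # 3 neurons, 2 inputs each
b = [0, 0, 1]
x = [1, 1]
print(dense_layer_parallel(W, b, x))
```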

Related: Portland startup to mine artisanal bitcoin using only slide rules and graph paper. Excellent!

It's the blue people! Avatar: The Way of Water surpasses $1B mark globally. Not surprising to me - it was a good movie. And a good reason to go to a theater, with the spectacle and 3D and everything. This is not a movie where you say "oh I'll wait for it to be on Netflix".

Speaking of Netflix, "Emily in Paris": why it's so hard to admit love for the show. Salon's tagline: "at best is something of an embarrassment, at worst living embodiment of cultural imperialism." Heh. I'm not sure I love it, but I've liked it a lot. It's entertaining, and that's all it has to be.

|

|

This weekend I [re]discovered a latent interest in Football. I honestly haven't watched much since the pandemic, missed the bowls and playoffs at the end of 2020, when they were moving around and being rescheduled and cancelled and everything, and didn't pay any attention last year, for ... reasons. (And to be fair, spent January sailing in Florida - a story to be told here at some point - so I was otherwise occupied.)

Anyway, yeah, my DVR has tens if not hundreds of college bowls queued up, all the way through the big ones last weekend, and now pro games too, just in time for the playoffs. It's a good combination with Zwifting; you can ride and watch at the same time, both activities only require fractional brainpower.

One big change I haven't blogged about here yet is that during the pandemic we moved - not far, still in Westlake Village, CA - and in the process have now fully embraced AT&T DirecTV streaming as our "cable", and shed our old TiVos. So we now have a cloud-based DVR. I always loved TiVo - was a super early adopter, as you know - but I have to admit this is nice. Including being able to easily watch on my computer.

[grumpy sidenote: HEIC, WTF? - did we need a new image format? No, we did not... and why oh why did Apple decide to make it the default on their phones?]

|

|

Hi, I'm Ole, and I'm a crypto grump. I know how it works - better than most people - and I understand blockchains and cryptography and all the tech, and I appreciate the potential for having a digital currency used as a value exchange intermediary which isn't backed by physical assets like gold.

But. The crypto fad of the last few years doesn't have anything to do with any of that. It's a distributed pyramid scheme, and as an official crypto grump I do not see any value being created. Tell me all about ICOs and NFTs and DAOs - go ahead. Extol Web3 to me. No. Value. Created. Watch the video linked at right - it's excellent - and see if you don't agree.

So I'm watching the Rose Parade and in the middle of all the floats from big companies and cities and charities, there's a float from nouns.wtf*. Maybe you've heard of them? If not, let me tell you - it's a beautiful example of the whole scheme in action.

You might think a noun is a part of speech, but also, it's a 32x32 pixel caricature of a little person, formed from a random combination of features like head shape, glasses, body, etc. At right is Noun #563, which was "minted" on Jan 1, 2023. Every day a new Noun is born and auctioned off, and every day someone wins the auction and buys one. This particular Noun was bought for 35.0 ether (ETH), which on Jan 1, 2023 was worth about $47K. Huh.
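The "random combination of features" idea is simple to sketch. Below is a toy Python version, assuming made-up trait lists and a SHA-256 hash of the Noun number as the source of randomness; it is not the actual Nouns contract (which runs on Ethereum), just an illustration of how a deterministic seed yields the same combination for everyone who computes it.

```python
import hashlib

# Made-up trait lists for illustration only.
TRAITS = {
    "background": ["cool", "warm"],
    "body": ["red", "blue", "green"],
    "head": ["shark", "pizza", "chair", "whale"],
    "glasses": ["square-red", "square-blue", "round-black"],
}


def generate_noun(noun_id):
    """Deterministically pick one option per trait category from noun_id."""
    digest = hashlib.sha256(str(noun_id).encode()).digest()
    picks = {}
    for i, (category, options) in enumerate(TRAITS.items()):
        # Use a different byte of the hash for each category.
        picks[category] = options[digest[i] % len(options)]
    return picks


def combinations():
    """Number of distinct Nouns these trait lists can produce."""
    total = 1
    for options in TRAITS.values():
        total *= len(options)
    return total


print(generate_noun(563), combinations())
```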

So what did that person get for their money? The rights to the image, amazing and beautiful as it is? Well no, Nouns are open and available to everyone. You could use it yourself, right now, for $0. All they got was an entry in the Ethereum blockchain, which they could potentially sell to someone else. So far about 570 Nouns have been created, and 126 transactions have taken place after the initial auction, so it would be hard to say that they are in demand. But every day there's an auction for a new one, and people bid, and someone wins. No. Value. Created.

What about that parade float? Well if you auction off 570 Nouns at $47K or so each, you can pay for one. Just now the Noun treasury contains 28,580 ETH, worth $38M.

My favorite part of this "project" is that there are ten "nounders", and every tenth Noun goes to them. They are paying themselves each about $5K every ten days. And they're proud of it ... Such a deal! It's good to be living on the tip of the pyramid.
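The numbers hang together. A quick Python check using the figures in this post, assuming Noun #563's $47K price is typical, one Noun is minted per day, and ETH held near its Jan 1 price:

```python
# Figures from the post; assumes Noun #563's price is typical.
noun_price_eth = 35.0
noun_price_usd = 47_000
treasury_eth = 28_580
nounders = 10

eth_usd = noun_price_usd / noun_price_eth  # implied ETH price on Jan 1, 2023
treasury_usd = treasury_eth * eth_usd

# One Noun is minted per day and every tenth goes to the nounders, so they
# receive about one Noun every ten days, split ten ways.
per_nounder_every_ten_days = noun_price_usd / nounders

print(f"ETH ~ ${eth_usd:,.0f}; treasury ~ ${treasury_usd / 1e6:.1f}M; "
      f"each nounder ~ ${per_nounder_every_ten_days:,.0f} every ten days")
```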

There are hundreds of such scams operating in parallel. Everyone is buying tokens for X today hoping they'll be worth more than X tomorrow. And they will be for a while. Just get out fast, because the whole thing is unsustainable. No. Value. Created.

So, what about all the VCs who are investing in crypto? Well VCs always live at the tip of the pyramid, this is perfect for them. They are smart people, they are not confused. They will get paid in tokens, they will sell their tokens, and they will get out early, before the stuff hits the fan. When the music stops it will be ordinary retail investors who end up owning those entries in the blockchain and desperately trying to find buyers.

As a final note, for some reason some people choose to compare AI to crypto, maybe because they're related in time. But don't be confused. As Miguel de Icaza notes: AI is hyped because it is useful and shows a lot of promise. Crypto was hyped because scammers and swindlers found they could make a killing. They are not the same thing.

* BTW yeah, there is a "wtf" TLD, who knew? Perfect for crypto projects!

|

|

Wow, still wet out there. All over. With more rain in the forecast. I love rain, but not all at once, please.

So I posted about being a crypto grump, but I'm also an AI cheerleader. If you've played at all with DALL-E or ChatGPT or any of these sorts of tools, you can immediately see the incredible value being created. (BTW, I use AI as shorthand for AI/ML, hope you're okay with that.) As Steven Levy notes in Wired, Welcome to the Wet Hot AI Chatbot Summer. (He's drawing a parallel to the so-called AI Winter...)

BTW the worst part of using the amazing OpenAI tools is having to go through their login captcha. Every time. Argh.

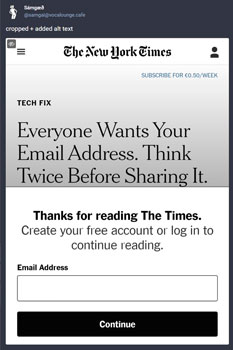

Ah, you got me started. Not only are captchas bad, but passwords are bad. (OpenAI requires both.) Fortunately the Internet is kind of settling into a "text message confirmation = password" alternative. But another problem remains - the "login or create new account" dilemma. I've created accounts on hundreds if not thousands of websites and of course I can't remember. Make it easy for me, please; if I enter an email and password and you don't know me, ask me if I want to create an account. How hard is that?
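Not very hard, at least in outline. A minimal sketch of a single sign-in form that logs you in, rejects a bad password, or offers to create an account, assuming a hypothetical in-memory `users` dictionary and standard-library PBKDF2 password hashing. A real site would add rate limiting and email verification, and would have to accept that answering "we don't know you" reveals which emails are registered.

```python
import hashlib
import hmac
import os

users = {}  # hypothetical store: email -> (salt, password hash)


def _hash(password, salt):
    # PBKDF2-HMAC-SHA256; the iteration count is an illustrative choice.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 200_000)


def sign_in(email, password):
    """One form for both cases: log in, reject, or offer to sign up."""
    record = users.get(email.strip().lower())
    if record is None:
        return "offer_signup"  # unknown email: ask before creating an account
    salt, stored = record
    if hmac.compare_digest(_hash(password, salt), stored):
        return "logged_in"
    return "wrong_password"


def create_account(email, password):
    salt = os.urandom(16)
    users[email.strip().lower()] = (salt, _hash(password, salt))


print(sign_in("ole@example.com", "hunter2"))  # unknown user
create_account("ole@example.com", "hunter2")
print(sign_in("Ole@Example.com", "hunter2"))  # same account, any case
```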

Salon: An AI that can "write" is feeding delusions about how smart artificial intelligence really is. We certainly have a chorus of professional writers disclaiming AI's ability to replace them. I think we should ask ChatGPT to write an article, in the style of Salon, about how AI is not really intelligent. Oh, wait... [Later: someone did :)]

As you know I'm a close follower of the Iditarod, and recently I got an email from them asking "who's ready for Iditarod 2023?" Great question. And, not me, yet. If you've hung around here in the past you know I traditionally post about the Iditarod, and have even created a little race tracker to help follow the action. Which I did do in 2020 - it was just before the pandemic became "the pandemic" - and did do in 2021 - a weird year with the Iditarod being one of the few sporting events still held - but which I did not do last year - I was sailing in Florida. But this year I'd like to do it again ... time to get ready!

Excellent! Boom Supersonic unveils new Symphony engine for faster-than-sound Overture airliner. Having lived through the whole SST era, I can't believe we don't have these yet...

Henry Kissinger: how to avoid another war. "End the Ukraine War by trading NATO admission for Ukraine in exchange for Crimea and Donbas staying with Russia." Ah yes, diplomacy. [via David Sacks, my old PayPal colleague who has rapidly become one of my favorite Tweeters]

Along with crypto and AI, another present-day technology gathering headlines is AR/VR. Like AI it's been around for a bit, but unlike AI it hasn't found any killer apps. Yet. I think the form factor is the problem - people don't like wearing things on their head. Once we have reliable implants to inject video and audio directly into our brains, this problem will disappear :) but for the moment, it's a big hurdle. There are other problems too; Wired has a report from a metaverse skeptic: I Threw a Holiday Party in Horizon Worlds. It Didn't Go Well.

Another new technology gains traction: My Dystopian Ride In San Francisco's Fully Driverless Cars. As a happy Tesla Model S owner with Enhanced Autopilot, I feel this is now only a matter of time. There's a big difference between the car driving for you - while you are sitting there - and having no driver at all.

John Battelle: The Next First Day. "I'm resolved to come back to this patch of land and dig around. I don't expect anyone to notice, and that’s OK. I need to till the soil, clear the rocks and roots, and find out what might grow here." Yeah, me too!

|

|

When the muon was first detected, renowned physicist I. I. Rabi had the perfect reaction: "who ordered that?" It was a confusing new thing and it wasn't immediately apparent to anyone what to do with it.

Well, that's sort of the way I reacted to Twitter; I'm still a rather reluctant late adopter, and (like most people) I follow way more than I react or post.

Now we have Mastodon! Yay. And my first reaction is: "who ordered that?"

It's an open-source distributed messaging system which has a lot of similarity to Twitter. Anyone can post anything, link to anything, and comment on anything. There are likes (favorites) and "retweets". Pictures and videos. And there is a content moderation protocol which is designed to keep a certain level of sanity - eliminate child pornography, for example.

People who were unhappy with Elon Musk's acquisition of Twitter have searched for alternatives, and to date Mastodon has emerged as the most popular alternative. It's vastly smaller than Twitter, and there's such a huge network effect already in place that it's impossible to imagine Twitter being supplanted. But unlike some moves in the physical world - like moving to Canada - online you can be in two places at once.

The biggest difference is that with Twitter, the whole thing is operated by one company, whereas with Mastodon there is a network of servers cooperatively communicating, but each operated by separate companies or organizations. Kind of like with email, you have to choose who you want to be "your host", and then after that you can interoperate. Your host is responsible for managing your account; it could go off the air, taking you with it. And it decides what to moderate - different hosts have different policies.

I decided to see what the fuss is all about, and opened an account: ole@universeodon.com. Now I can post there, and read Mastodon messages, and follow people there. Yay. But if I want to check on flooding in Santa Barbara, Twitter is going to have way better information. Way more junk and ads too, but so it goes.
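The email comparison is literal: a Mastodon handle like ole@universeodon.com names a user and the server that hosts them, and other servers discover the account by asking that host via WebFinger (RFC 7033), which Mastodon supports. A minimal sketch that only builds the lookup URL and makes no network call; `split_handle` and `webfinger_url` are hypothetical helper names:

```python
from urllib.parse import urlencode


def split_handle(handle):
    """Split '@user@host' or 'user@host' into (user, host)."""
    user, sep, host = handle.lstrip("@").partition("@")
    if not sep or not user or not host:
        raise ValueError(f"not a user@host handle: {handle!r}")
    return user, host.lower()


def webfinger_url(handle):
    """URL another server would query to discover this account."""
    user, host = split_handle(handle)
    query = urlencode({"resource": f"acct:{user}@{host}"})
    return f"https://{host}/.well-known/webfinger?{query}"


print(webfinger_url("@ole@universeodon.com"))
```

If that host goes off the air, this lookup fails, which is exactly the "it could go off the air, taking you with it" risk.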

[Update, yay, dlvr.it supports Mastodon. So now when I post here, a link is relayed and posted there, just like with Twitter.]

|

|

When I started blogging again last December I had a backlog of "interesting stuff" to relay. Some of it was interesting at the time, but the interestingness was timeframe specific. Other things are interesting exactly because they provide a point-in-time snapshot. And some things are just interesting!

June 2020: Facebook and PayPal invest in Southeast Asian ride-hailing giant Gojek. Biggest country you never think about: Indonesia. Fourth biggest by population. An island nation where everyone has a cellphone and most everyone has Internet access. Gojek are way more than a ride-hailing service, they're on the path to be an "everything app" like WeChat. This makes sense.

July 2020: Meet BukuWarung, the bookkeeping app built for Indonesia’s 60 million "micro-merchants". BukuWarung, meet Gojek. Imagine signing up 600,000 merchants in one year!

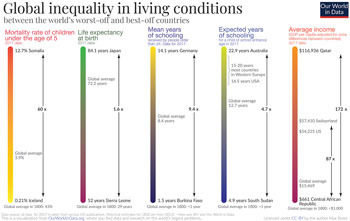

July 2020: Visual Capitalist: The global inequality gap. As always from them, great data, and great to see visually.

BTW Indonesia's 280M people are near the top of the third world in most categories. Ahead of China (#1 by population) and India (#2) in many of them.

One of those point-in-time things, Sept 2020: VDH: The news as we once knew it is dead. "Why? ... Picture yourself in your 50s in a job where you've spent 30 years working your way to the top, to the cockpit of power … only to discover that you're presiding over a dying industry. The Internet and alternative media are stealing your readers, your advertisers and your top young talent."

Nov 2020: via Digg: A Visualization Of The Largest Cities By Area Around The World. It's amazing how densely we live!

Continuing the Indonesia theme: Jakarta packs 10M people into [only] 256 sq miles.
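Taking those two figures at face value (and using 1 sq mile ≈ 2.59 km²), the density works out to roughly:

$$
\frac{10{,}000{,}000\ \text{people}}{256\ \text{mi}^2} \approx 39{,}000\ \text{people/mi}^2 \approx 15{,}000\ \text{people/km}^2
$$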

Dec 2020: Capturing the great conjunction. As Jupiter and Saturn crossed paths closely, the ISS crosses between them. Wow.

Talk about topical, Dec 2020: Matt Ridley: Why mRNA vaccines could revolutionise medicine. Note this article was written before all the Covid vaccines became available in Spring 2021. I remember reading it thinking, hmmm...

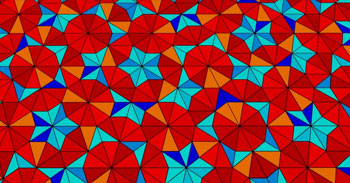

![[the game of] Life at 50](images/2301/Life-at-50.jpg) Dec 2020: jwz: [the game of] Life at 50. A pretty interesting look back at a pretty interesting "game". I love the Martin Gardner remembrance; remember Scientific American's Mathematical Games column?
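For anyone who never played with it: Conway's Life needs only two rules. A live cell with two or three live neighbors survives, a dead cell with exactly three live neighbors comes alive, and everything else is dead in the next generation. A minimal Python sketch on an unbounded grid, storing only the live cells as a set of (x, y) coordinates (one common representation among several):

```python
from collections import Counter


def step(live):
    """Advance one generation; live is a set of (x, y) tuples."""
    # Count how many live neighbors every candidate cell has.
    counts = Counter(
        (x + dx, y + dy)
        for x, y in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    return {
        cell
        for cell, n in counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


blinker = {(0, 1), (1, 1), (2, 1)}  # a horizontal bar of three cells
print(sorted(step(blinker)))  # flips to a vertical bar
```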

Timeless, Jan 2021: Matt Ridley: the folly of renewable energy. "If you judge by the images used to illustrate reports about energy, the world now runs mainly on wind and solar power. It comes as a shock to look up the numbers. In 2019 wind and solar between them supplied just 1.5 percent of the world’s energy consumption. Hydro supplied 2.6 percent, nuclear 1.7 percent, and all the rest — 94 percent — came from burning things: coal, oil, gas, wood, and biofuels."

Jan 2021: Stephen Wolfram: from Assembly Language to a Nobel Prize. On the calculations which come from Feynman diagrams...

Feb 2021: Joshua Newman: how to be lucky. "Most of us think that luck just happens (or doesn't) but everyone can learn to look for the unexpected and find serendipity." I so believe this.

Feb 2021: Fry's Electronics closes permanently nationwide. A sad moment, but inevitable and long-expected. I spent many a pleasant hour browsing their shelves...

June 2021: Bill Gurley: Customers Love Free Stuff … But That’s Not Your Problem. I love Bill's posts; his vision is truly above the crowd. This was posted at a time of "hot" IPOs; we're not in one now, but those times will be back.

---- so yay, we made it halfway from 2020 to today ---

And to wrap up:

July 2021: The Indonesian government announced that they would deliver free telemedicine services for COVID-19 patients with mild symptoms across the country. Via Bercie Mesko, who comments: "It's only possible for them as they have startups that did the hard work."

|

|

So I linked to an article in Salon criticizing ChatGPT for being "artificial" intelligence. (Actually, they didn't put it that cleverly.) And I noted, "I think we should ask ChatGPT to write an article, in the style of Salon, about how AI is not really intelligent."

So:

|

|

Write an article, in the style of Salon magazine, about how ChatGPT isn't truly intelligent

|

|

Chatbots have come a long way in recent years, with advancements in machine learning and natural language processing (NLP) allowing for increasingly human-like interactions. One of the most popular chatbot models is GPT-3, developed by OpenAI. While GPT-3 can certainly hold its own in conversations and even generate creative writing, it is important to remember that it is not truly intelligent.

It is important to note that true intelligence is a complex concept and is not fully understood. However, a key component of intelligence is the ability to understand and process new information, and make decisions based on that information. GPT-3, like other chatbots, relies heavily on a large dataset of pre-existing text to respond to prompts. It has been trained on a wide variety of texts, including books, articles, and conversations. This allows it to understand the nuances of language and respond in a way that seems human-like.

However, GPT-3 lacks the ability to truly understand the world in the way that humans do. It does not have knowledge of the world outside of the text it has been trained on. It does not have consciousness, the ability to perceive, think, and feel like a human. It does not have the ability to make decisions based on experience, or to process new information in the way that humans do.

One of the key limitations of GPT-3 and other chatbots is that they do not understand context. They can respond to a prompt with a coherent and seemingly relevant answer, but they do not understand the meaning behind the prompt or the context in which it was asked. For example, if you ask a chatbot, "What is the capital of France?" it will likely respond with "Paris." But if you ask, "I'm thinking of a city in Europe that is the capital of France," the bot may not be able to understand the context of the question and give a coherent answer.

Another limitation is that GPT-3 does not have the ability to reason, which is crucial for understanding the world. It does not have the ability to connect new information with existing knowledge, or to make deductions based on that information. This limits its ability to solve problems and understand complex concepts.

In conclusion, GPT-3 is a powerful tool for natural language processing and can hold its own in conversations and creative writing. However, it is important to remember that it is not truly intelligent. While it can simulate human-like responses, it lacks the ability to truly understand the world and make decisions based on that understanding. As we continue to make advancements in AI, it is important to keep this in mind and recognize the limitations of current technology.

|

I don't know if ChatGPT truly captured Salon's style - the clickbait gotcha is missing - but that's not bad. Arguably better than what they wrote...

|

|

Today was cold but not wet. Another good day for coding, Zwifting, and blogging.

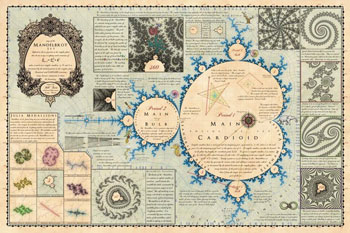

Spent most of the day trying out variations on this diagram. As usual there appears to be a trade-off between easy and fast. So be it.

Molly White: In 2022, web3 went just great. [via Miguel de Icaza] Certainly a lot of well-publicized hacks and other disasters. But the overall lack of value creation hasn't sunk in.

Reading about the FTX implosion I'm struck by the circular nature of so much of the crypto investment. Bloomberg notes: crypto banks owe themselves money.

Meanwhile: Coinbase is laying off 1,000 people. My first reaction: Coinbase has 1,000 people? What did they do all day?

Bill Gurley: On the Road to Recap. He tweeted "I wrote this five years too early". I hope a lot of entrepreneurs read it back then; otherwise they'll wish they had...

Boing Boing: In a challenge to Google, Microsoft is adding ChatGPT to Bing. Lots of buzz about this, including strong rumors of a further Microsoft investment in OpenAI. It's interesting to think about what this would look like. Your chats get turned into a search predicate? Or the engine does a search, and renders the results for you as chat?

Miguel de Icaza: to my surprise, jwz's 'CADT model' applies even to large organizations. Maybe he should have written "especially."

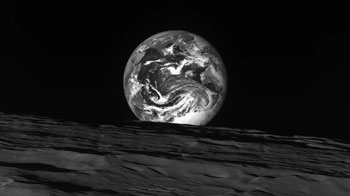

CNET: South Korean Moon Mission Delivers Devastatingly Gorgeous Earth Views. Indeed. What a great time for space!

Lawrence Person: dear restaurants, shove your damn QR codes. This wouldn't matter so much if the online menus were simply renders of the paper menus, but often they're inferior and incorrect subsets.

This is way more interesting than you might think: A Civil Engineer Explains The Difference Between Spillway Gates, And What They Do. Who doesn't like giant dams, and who hasn't wondered what happens when there's too much water? I love his 3D printed models, too.

Agree entirely; Wired: Andor is a master class in good writing. As enjoyable as the original Star Wars and The Mandalorian, and for the same reasons ... fun characters, great stories. I especially like the inside baseball on the Empire side. The force is strong with this one.

Bonus observation: the special effects are great too; how excellent is that water planet prison?

More, sooner, please: Nuclear power catching the eyes of Silicon Valley's tech billionaires. I have always been mystified why this hasn't happened sooner. EVs are all very exciting but still largely fossil fuel powered. And solar and wind are weak and worthless alternatives.

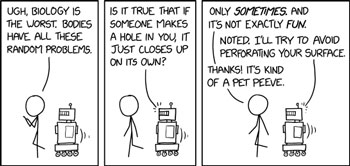

xkcd: Biology vs Robotics. So great.

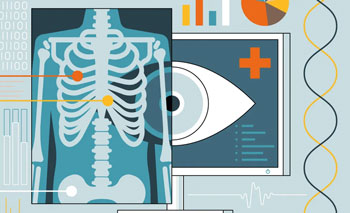

Becker's: Amazon will be the biggest threat to health systems' core business. Yep. Amazon is the biggest threat to everyone's business, but healthcare will be one of its largest conquests. The key here is the value add of consumerism.

Oh but wait: Google introduces chatbot for healthcare providers. Looks like it's Microsoft + OpenAI vs Google + DeepMind, and so what will Amazon do? Stay tuned :)

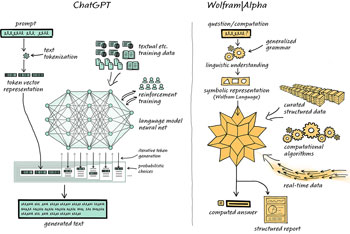

Well this is interesting: Wolfram|Alpha as the way to bring computational knowledge superpowers to ChatGPT. This article has some pretty interesting examples where ChatGPT is wrong, and how Wolfram|Alpha can be a resource. Kind of like a human holding a calculator.

BTW have to say for the Nth time, Wolfram|Alpha is amazing and weirdly off the radar.

|

|

Good morning! It is Friday. The 13th. You have been warned :)

For those who found this post on Facebook, yay. If you like the links from there to here, or don't, please say so... Right now the survey is running 53% yes and only 13% no, but it's a small sample...

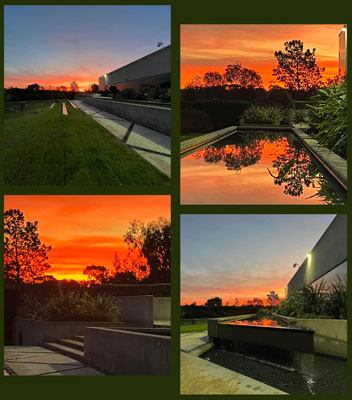

Thanks to Matt Struble for this awesome sunrise series from this morning! I wasn't up, but seems like I should have been...

Red sky at night, sailor's delight, red sky in morning, sailor take warning ...

<rant>I love the way Apple's iCloud sync finally works, and any pic I take on my iPhone or iPad is automatically synced to my PC. (I say finally because it was not always so...) But I hate (okay, dislike) the way it takes so long. Why is the polling interval 15 min? Half the time I end up emailing a specific pic from my phone to my PC because I'm tired of waiting for the auto-sync. </rant>

Have been seriously loving Zwifting. Three days ago, rode in France (that's Mont St Michel at left). Two days ago, Japan. Yesterday, New York's Central Park*. Meanwhile it was like zero here outside. If you're a cyclist you should try it, and if you want to do a ride, let me know. I'm about 2 W/kg...

* BTW love the way the Zwift people have reimagined Central Park with translucent aerial bike paths; great views, and adds some vertical. NY city planners take note :)

Another thing I'm loving is Feedly. SharpReader, I hardly knew ye (well, except for the last 20 years :). Being able to read posts on my iPad and then having them relayed to my PC automatically has been game changing.

Blog inside baseball - made some changes to my archive. Hope you like them. I *still* think this way of archiving old posts is the best I've seen. Easy to find stuff and easy to browse. YMMV.

And so now we have the ThinkPhone, a Lenovo brand extension to the ThinkPad laptop line they bought from IBM. Kinda cool; I'd be tempted if I was an Android person.

CNET is experimenting with an AI Assist. Well, why not? It might be more insightful and certainly less biased than their human reporters. And there will still be human editors.

Medium creates its own Mastodon instance. Huh, interesting. I'm not a Medium follower or user, but seems like this makes sense for those who are.

BTW haven't been able to figure out how to identify inbound links from Mastodon. I'm guessing near zero. If you got here via Mastodon, can you let me know?

Cool! The James Webb telescope's first confirmed exoplanet is 99 percent the size of Earth. It's fiendishly hard to find exoplanets; they are not directly "visible", but have to be deduced indirectly, from their gravitational tug on their stars or from the slight dimming as they pass in front of them (which is how Webb confirmed this one). The smaller they are the harder they are to find, which is why so many of the exoplanets found to date are gas giants.
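A rough sketch of why small planets are so hard to spot with the transit method (one common indirect technique, and the one Webb used for this planet): the dip in starlight is about the planet's disk area divided by the star's, (R_planet / R_star)². The radii are approximate standard values, and the star is assumed to be Sun-sized; Webb's planet actually orbits a smaller red dwarf, which makes its dip deeper than this estimate.

```python
# Rough transit-depth estimate: the fraction of starlight blocked when a
# planet crosses its star's disk, approximated as the ratio of disk areas.
# Assumptions: approximate radii, and a Sun-sized host star.

SUN_RADIUS_KM = 695_700
EARTH_RADIUS_KM = 6_371
JUPITER_RADIUS_KM = 69_911


def transit_depth(planet_radius_km: float, star_radius_km: float = SUN_RADIUS_KM) -> float:
    """Fractional drop in brightness, (R_planet / R_star) ** 2."""
    return (planet_radius_km / star_radius_km) ** 2


earth = transit_depth(EARTH_RADIUS_KM)
jupiter = transit_depth(JUPITER_RADIUS_KM)

print(f"Earth-size planet:   {earth * 1e6:.0f} parts per million")
print(f"Jupiter-size planet: {jupiter * 100:.2f} percent")
print(f"Jupiter's dip is about {jupiter / earth:.0f}x deeper")
```

An Earth-size planet dims a Sun-like star by well under 0.01 percent, versus about 1 percent for a Jupiter, roughly a 120-fold difference.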

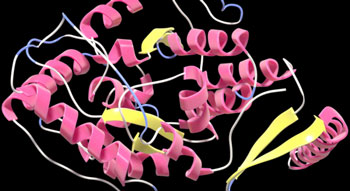

Visual Capitalist: The Most Important Science Headlines of 2022. Excellent, I love this. And yes I know you can't read them here, you have to click through :) Hard to pick the winner - but DeepMind's announcement of AlphaFold might end up being the most important...

From NASA: why isn't every year the warmest year on record? They explain, but you get the feeling they are rooting for climate change to be "real". Weird. They're a scientific organization, why not just stick to the science? Yeah I know the answer.

This is what NASA should be doing: TESS discovers planetary system's second Earth-size world.

Meanwhile: SpaceX flexes might with simultaneous Starship, Falcon 9, Falcon Heavy, Dragon operations.

Space is not easy: Virgin Orbit blames launch failure on upper stage anomaly. The first ten SpaceX launches didn't go perfectly either. It takes time and lots of hard work. Glad they are trying and wish them luck!

At this point no surprise: Tesla beats BMW to become the #1 in luxury sales. Ten years ago, who woulda thought?

The pic at right is a BMW EV, highly rated. A friend of mine just got one - he was a Tesla Model S owner, wanted something new - he likes it a lot but every phrase is a comparison to Tesla. To my eye it is not pretty.

Sure go ahead and make fun of Elon Musk, but ... SpaceX and Tesla. And now you think he can't run Twitter? Stay tuned.

You've all heard Jeff Beck has passed on... especially poignant for me was Ottmar Liebert's tribute. "For me he was THE best. His tone, his phrasing, his bending… Damn, he was so good." It's notable how many great guitarists are singing his praises. For me he was good but not in the Stevie Ray Vaughan, Eddie Van Halen, Robin Trower class. YMMV.

xkcd hits another one out of the park: Outdated periodic table.

Okay, so much for a filter pass ... now onward to more CUDA coding!

|

|

Yesterday I voyaged up to Santa Barbara to check on my boat, and the Yacht Club; you've maybe read about the storms last week, and the washing away of the beach, and the silting of the harbor mouth. It's quite a thing; last time there was a storm like this was 1983.

So the good news: my little boat is fine - it lives in the harbor - just had to adjust it on its air bed, and take down the Christmas decorations (!) - and the club is fine too - turns out it's waterproof, and designed for this. (If you're not familiar the club usually sits on a beach, about 50 yards from the surf, and now that beach is gone.)

The bad news is that sailboat racing has been suspended until they can dredge the harbor mouth. The picture at left was taken last week; that's the end of the breakwater, and the harbor mouth is now so shallow that people are surfing over it!

The weather continues to be so crummy that sailing probably wouldn't have been much fun anyway. In a few weeks maybe the harbor mouth will be open again, the sun will be out, and we'll be back to our normally scheduled Southern California winter. Stay tuned!

|

|

So I did my first Zwift group ride, in Neokyo on the Makuri Islands. (No, don't bother looking this up on Google Earth, these are made up islands and Neokyo is inspired by Tokyo but doesn't exist.) It was pretty cool, with many of the same elements found in any group ride.

First, the pace was high, much higher than I would have maintained riding alone. The ride was paced by an NPC, an electronic rider (shown as neon green in the picture) who maintained 3 W/kg the whole time. Fortunately Zwift simulates drafting, so even with a lower power output than that I was able to stick with the group. Anytime I got slightly ahead the group caught me - just like in a real group ride :) - and anytime I fell behind I had to work hard to catch it again - just like in a real group :/. I love the way Zwift simulates the left/right up/down nature of the group, riders moving around, although in Zwift you can't hit anyone and crash, you just sort of glide through them. As the group overtook single riders we parted slightly to let them through but mostly just rode right through them; no harm no foul. The "ride ons" and other jabber in the group were also fun, and some people had even mastered commenting while riding, which I have not.
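For non-cyclists: W/kg is just sustained power divided by body weight. Here's that arithmetic as a tiny Python sketch; the 75 kg rider is a hypothetical example, not me or anyone on the ride.

```python
# Power-to-weight ratio, the number Zwift (and cyclists generally) use to
# compare riders: sustained power in watts divided by body mass in kg.
# The 75 kg rider below is a hypothetical example.


def watts_per_kg(power_w: float, mass_kg: float) -> float:
    return power_w / mass_kg


def watts_needed(w_per_kg: float, mass_kg: float) -> float:
    return w_per_kg * mass_kg


rider_kg = 75.0
for target in (2.0, 3.0):
    print(f"{target} W/kg for a {rider_kg:.0f} kg rider = {watts_needed(target, rider_kg):.0f} W")
```

Going from 2 to 3 W/kg means 50 percent more power for the same rider, which is why hanging onto the draft makes such a difference.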

Zwift uses a cool architecture wherein you can have a computer hosting your ride, and use an app on your phone as a "companion" to guide the ride. The phone can be on your handlebar or in your pocket, and lets you take quick actions like sending messages to riders around you. I'm still figuring out how to do that while pushing over 2 W/kg, but it's nicely done, a good use of all the tech.

With lots of rain outside already looking forward to my next ride inside later today. Ride on!

|

|

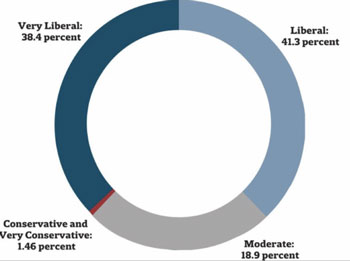

Wow, rain rain rain. They tell me we still have a drought, but somehow I doubt it; check out the table of California lake water levels at right. (Click to enbiggen.) Getting it all at once like this is going to cause problems though...

Some pretty large lakes are up a lot - Shasta 20+ feet! - but the one that caught my eye was Tahoe up one foot ... that is a lot of water.
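How much is a foot of Tahoe? A back-of-the-envelope sketch, assuming a surface area of about 191 square miles (the commonly cited figure) and treating the lake like a tank with vertical sides, which is close enough for a rough number.

```python
# Back-of-the-envelope volume of a one-foot rise in Lake Tahoe.
# Assumption: surface area of about 191 square miles, and vertical "walls"
# (the real shoreline slopes, so this is only an approximation).

SURFACE_SQ_MI = 191
SQ_FT_PER_SQ_MI = 5280 ** 2
ACRES_PER_SQ_MI = 640
GALLONS_PER_CU_FT = 7.48052  # US gallons

rise_ft = 1.0
volume_cu_ft = SURFACE_SQ_MI * SQ_FT_PER_SQ_MI * rise_ft
acre_feet = SURFACE_SQ_MI * ACRES_PER_SQ_MI * rise_ft
gallons = volume_cu_ft * GALLONS_PER_CU_FT

print(f"{acre_feet:,.0f} acre-feet, about {gallons / 1e9:.0f} billion gallons")
```

That comes out to roughly 122,000 acre-feet, or on the order of 40 billion gallons.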

Thanks Peter Simons for the chart. Bonus observation: Newsweek still exists?

Fascinating: How Elon Musk's satellites have saved Ukraine and changed warfare.

Am just rereading The Hunt for Red October for the hundredth time, and am struck again by the importance of communications in war.

NYTimes: The Party's over for us. Where do we go now? Conservatives debate the future of the Republican party. I keep hoping there will be a place for conservative thinking in the Democratic party ... maybe I'm a dreamer, but I'm not the only one.

I just rewatched The Big Short. What an excellent movie. (Based on an excellent book, which it pretty faithfully follows.) The tragic economic crash of 2008-09 already seems like ancient history, right? But so many echoes of that in the current crypto bubble.

When it became obvious (to a few) that mortgage-backed securities like CDOs were going to crash, there was no way to short them; they had to create credit default swaps so they had a way to do it. It's now obvious (to a few) that crypto tokens are such a house of cards; is there any way to short them? Do we have to create one?

I was reading old posts and came across this one from 2020: Tim Bray: I don't believe in Bitcoin. He noted the lack of adoption by geeks as a red flag. He was and is right. The next killer app on the blockchain will be the first one.

Bonus note: back then I was blogging at Buck's in Palo Alto. Just after InTouch was acquired by Teladoc, and just before the Covid pandemic hit. Wow. Only three years ago but ... wow.

Charlie Munger on remote work: Those people are never going back. Nope.

My iTunes Match subscription is up for renewal. I hardly ever use iTunes anymore. I listen to music via Spotify or LiveOne (formerly LiveXLive, formerly Slacker; it came with my Tesla, I started using it, and still do...) I'm wondering if I still need it? And what will happen if I cancel? LMK if you have a thought...

Marc Andreessen: Pure, absolute, indescribable magic. It kind of is, right?

In 2015 I linked Brad Feld: Hollywood's massive miss on strong AI. At that time (and maybe still) Minority Report was thought to be the best attempt to represent "strong AI" in a movie. Now we have ChatGPT, which is pretty strong. Will there be a movie made? Yes. Will it be a good one? Um...

Okay. I read Project Hail Mary, the new book by Andy Weir, author of The Martian, and thought it was excellent. And inevitably, it will be made into a movie. But starring Emma Stone as Rocky? Um what? Rocky is a 5-legged alien spider made of rocks. He "speaks" with musical sounds. I get the desire to pair her with Ryan Gosling but I do not get this casting. I'm most curious to see.

This flying electric boat wants to be the Tesla of the sea. So be it.

While I was in Monaco last October - competing in a regatta, yes, that's a story I must tell - I noted that virtually all of the tenders for those huge yachts berthed there were electric. The wave of the future.

Wow, happy 20th birthday to Safari! Seriously that makes me feel old. I so remember when it came out, a real "who ordered that" moment. At that time the competition was IE (!), and Firefox (remember them? :)

If you are determined to mine Bitcoin, here's a good solution for you: a Bitcoin mining space heater. I'm pretty sure Satoshi et al never anticipated electricity cost to be a limiting factor on adoption, but here we are.

The WSJ: CES 2023: All the Cool, Crazy and Cute Tech We Found in Las Vegas. In case you missed it, you didn't miss much. This list is notable for what is not on it - anything groundbreaking. Maybe the tech for 3D without glasses, I'll put my marker down for that.

Did you know? The WSJ is by far the largest circulation newspaper in the U.S. Visual Capitalist: The top 25 newspapers by circulation. I didn't find this surprising, but I was kind of amazed that the circulation was only 697K. How the mighty have fallen. The LA Times is 6th on the list, at 142K.

Well onward into the day ... lots of rain, no sailing, no cycling (except Zwifting later), hence, coding!

|

|

Good evening. Still raining, but sun on the horizon. Yay. Watching football - big queue left over from the weekend - Zwifting, coding, and of course, blogging...

Can't decide whether to synthesize a title for filter pass posts or not. Guess, not?

A gift from my daughter Alex: Exceptionally Bad Dad Jokes. They are. "When does a joke become a Dad joke? When it becomes apparent." You have been warned.

News you might use, from Shelly Palmer: the alphabet soup of video monitors. A perfect example of how scale reduces cost; you can get a great, huge monitor for <$1,000 today, and it's good enough; the best might be better, but it's at the margin now.

So cool: Wired reports a teenager solved a stubborn prime number 'look-alike' riddle. Have you ever heard of Carmichael numbers before? Me neither. We've known since 1994 that there are infinitely many of them; now we know they're spread out all along the number line: for any sufficiently large X, there's always one between X and 2X.
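If you're curious what these look like, here's a small brute-force sketch in Python. It uses Korselt's criterion (a standard characterization: n is a Carmichael number exactly when it is composite, squarefree, and p - 1 divides n - 1 for every prime p dividing n) rather than testing every base directly, and it factors by trial division, so it's only practical for small numbers.

```python
# Carmichael numbers are composite n that still pass the Fermat test
# pow(a, n - 1, n) == 1 for every base a coprime to n, which is why they
# "look like" primes. This sketch checks Korselt's criterion instead.


def prime_factors(n: int) -> list[int]:
    """Prime factors of n with multiplicity, by trial division."""
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)
    return factors


def is_carmichael(n: int) -> bool:
    if n < 3 or n % 2 == 0:  # every Carmichael number is odd
        return False
    factors = prime_factors(n)
    if len(factors) < 2:  # n is prime
        return False
    if len(set(factors)) != len(factors):  # not squarefree
        return False
    return all((n - 1) % (p - 1) == 0 for p in factors)


carmichaels = [n for n in range(2, 10_000) if is_carmichael(n)]
print(carmichaels)

# 561 = 3 * 11 * 17 is composite, yet it passes the base-2 Fermat test.
print(pow(2, 560, 561))
```

For small ranges you can eyeball the X-to-2X pattern (1105 and 1729 both fall between 1,000 and 2,000), but the new theorem is about every sufficiently large X, which no brute-force search can check.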

This is pretty great, from The Discoverer: 10 natural wonders that prove magic exists. Not sure they are actually magic, but certainly awe-inspiring. And all on my bucket list.

The one which might actually be magic is the sailing stone racetrack playa. Scientists finally caught the stones in the act in 2014 (thin sheets of wind-driven ice push them across the wet playa), but it still looks like magic.

This is hilarious: Avatar and the Papyrus typeface. Ryan Gosling is perfect.

Too bad I never went: Noma is closing its doors. (Headline should have been, "Noma no more".) Somehow the strain of keeping one of these top restaurants at the top tells. I hope they don't end up like The Menu :)

For some reason our local theater had billed The Menu as a comedy, so we went. Pretty interesting, starts as a great sendup of high-end dining, but then...

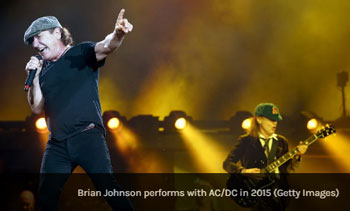

Washington Free Beacon: the lives of Brian. (... Johnson, frontman for AC/DC after Bon Scott died.) "The big rock acts have become tribute bands to their younger selves." That's kinda true, but not always. We recently saw Robert Plant and he was great, but in no way a tribute band for Led Zeppelin. Then again he had quite a solo career after Zep.

Interesting question: Was Salesforce's acquisition of Slack a bust? Not sure how the numbers worked out, but they definitely paid a lot: $27B! How much revenue would it have had to generate annually to support that price? Or, asked another way, how much of an increase in the value of Salesforce stock? Then again, it was primarily a strategic move; Microsoft Teams was emerging as an important competitor, and Salesforce maybe felt they had to be in that market. Most development teams I know love Slack more, but many are being asked to use Teams because it's part of Office.

Popular Science considers: Dark Matter, Jupiter's Moons, and more: what to expect from space exploration in 2023. They had me at Dark Matter. And the picture of the SpaceX Dragon too.

Sadly: 'The Science' is ruining science. "Perhaps the most startling aspect of the story is the sub-headline Nature used: 'No One Knows Why.'" Well I'll be 'no one': political correctness has intruded.

Interesting that this was published in Nature, which itself is exhibit A of this trend...

Powerline: The Science isn't scientific. In regard to Covid...

Real science: SpaceX USSF-16 Mission. So cool. Yes, you must watch!

Elon Musk: And that's how we will land on Mars. I haven't gotten tired of watching two stages land together side-by-side yet. Still feels like I'm watching a good movie.

Apropos: SpaceX signs agreement with US National Science Foundation to prevent Starlink’s interference with astronomy. "Elon Musk, the CEO of SpaceX, said SpaceX would ensure that Starlink has no material effect on discoveries in astronomy. 'We care a great deal about science'" Well, good.

Wow. Sam Bankman-Fried's secret 'backdoor' discovered, FTX lawyer says. "Sam Bankman-Fried instructed his FTX cofounder Gary Wang to create a "secret" backdoor to enable his trading firm Alameda to borrow $65 billion of clients' money from the exchange without their permission, the Delaware bankruptcy court was told Wednesday." That's B as in Billion. Amazing.

Brad Feld: Reflecting on Ponzi Schemes. So yeah, but more interesting, he followed with I don't hate crypto. I think he took a lot of inbound from fellow investors who don't want the secret to be revealed.

News I hope you won't have to use: How to Use the iPhone 14’s Emergency Satellite SOS. Interestingly it only works if you are off the grid, so it's hard to test. But if you need it, you need it.

This was not an easy feature to add; I wonder why they did it. Will people buy new iPhones to get this feature? Huh.

Wow, what a long post! I need to stop blogging now. Okay one more...

This tiny little capybara travels around via surfboard, except the surfboard is a big capybara. You're welcome :)

|

|

<rant>

So, cookies. Since the dawn of Internet time, websites have been able to work around the inherently stateless mode of web browsing by sending small bits of information called "cookies" to web browsers. Later, when the browser returns to the site (could be a second later), the cookies are sent back to the site, enabling state to be maintained. A good solution.

Later, people began exploiting cookies in bad ways. A second website belonging to, say, an advertising company, could have its content (like ads) embedded in many other sites, send cookies along with that content, then have them sent back whenever you visit any of those sites, and this allows it to track you. And/or, in a variation, the first website could share your information with the second website, as part of presenting ads, or for any other reason. A bad problem.

And so this somewhat arcane technical issue hit the radar of regulators, and they passed laws: now, in order for a website to send you a cookie, you have to agree to it first. And so now every website asks you, annoyingly, if you're okay with cookies, and you say "yes" and life goes on. After you say "yes" all the tracking/sharing stuff that could happen before can still happen. If you say "no", the website probably won't work and you can't use it. So this is a terrible non-solution to the problem.

What can be done? Well first, we shouldn't ask legislators to solve this kind of problem. They don't understand it, and they pass dumb laws which make life more difficult but don't solve the problem. We need a simple technical solution to a simple technical problem.

What if browsers had a global option to accept cookies? You could turn it on or off, depending on your preference. Oh wait - they already had that option. So no laws were actually needed. But yeah if you said "no" to cookies, the website probably didn't work.

What we really want is an option that says, "don't send cookies from anyone else, and don't share my information with anyone". That would be an easy option to set, it would be sent to every website in the HTTP header, and then it would be on the website to accede to your request. This is what has happened on phones, for example; you are asked whether it's okay to share your information (once!), and if you say no, then it's no. Every phone app doesn't have to ask every time. No new laws needed.

In the meantime, we all have to say "yes" every time we visit a website. How great is that? And it doesn't solve the problem, because we always say "yes". Well it isn't our biggest problem, but it's another example of a dumb law that makes our lives worse. Onward!

</rant>

|

|

Happy Lunar Rabbit Year!

As helpfully explained in this article in the South China Morning Post, this holiday is celebrated in China, Indonesia, and many other countries; over 2B people celebrate the new year this way! And so now we are in the Year of the Rabbit.

These old traditions are cool - I love thinking about what people were thinking about when they were created. They didn't understand about the Sun and the Moon and the Planets and the Stars, but it was easy to think they ruled our lives. Any sufficiently advanced technology is indistinguishable from magic.

And I love thinking about how Western-centric we US people are. The fact that billions of people - way more than us - are celebrating a new solar orbit differently doesn't enter our thinking. (Well, most of the time it doesn't...)

新年好! (Happy New Year!)

|

|

A quick filter pass ...

I'm having a weird problem with my mouse - it's double-clicking at random. After nearly five years and approx 1B clicks, the clicker is breaking. So be it. What's interesting is how many "hidden" features are uncovered by this random behavior. There's no standard for revealing what might happen on a double click (and actually, with the latest no-affordances crummy design ethos, a disappearing standard for revealing what might happen on a single click too). So you do it, accidentally, and see what happens ... it does not, however, blog automatically. Yet.

Today's picture is lifted from the Tour Down Under cycling race, first of the season, and first time in three years this event is being held. Yay, the "normal" pro cycling calendar is back.

Lots of non-news today. There was a report about Apple's AR/VR headset, and everyone went crazy parroting everyone else's report about it. And it's all a rumor, nothing real. Shows you that anytime you know about something in the news, you know they got it wrong.

(BTW this is not a picture from Apple, just someone's guess ... but feel free to share!)

Ann Althouse: Honoring Martin Luther King Jr. with a hilariously/tragically bad monumental sculpture. It is indeed tragically bad. I honestly debated whether to post this picture - this is a G-rated blog - but decided you would want to see it if you haven't already. WTF, right?

Dave Winer is working on a new tool called Feedland, "a feed management system for individuals and groups". I don't get it yet. I do think RSS is the bee's knees. Not sure what a feed management system would do for me. But Dave often sees around the corner, I'm staying tuned.

Now becoming apparent: Twitter was the ultimate cancellation machine.

Meanwhile, and interestingly, a lot of third-party Twitter clients have had their credentials revoked, allegedly for violating Twitter's terms of service. Reading between the lines, they likely displayed Tweets without ads, thereby depriving Twitter of revenue. So yeah, it sucks for them, but so be it. I would agree that communication about this has been curiously lacking; the Elon Musk regime has mostly explained the other changes they've made.

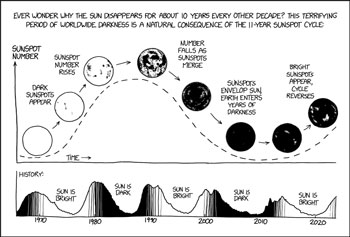

xkcd: Sunspot Cycle. Feels like I link every new post, but then again every new post is so good.

This you have to watch: Drone Dives the Full Height of the Burj Khalifa. Amazing. [ via Kottke ]

I have not heard: does this building move in the wind? What is it like to work on the higher floors?

Ottmar Liebert re-visits CDs. "the album in a store is simply a container for the ideas of the musicians and producers". The challenge for artists today is how to capture that value, when the incremental cost of distribution is $0 online. His last ten albums in the last ten years have been distributed online, and he's still doing it, so somehow it must be working...

Interconnected: filtered for ants and laws. A meanderingly thoughtful post:

- Let’s say we could chat with ants. Could we trade with them? What would we want from them?

- Let’s say you want to stop eating cows and chickens, and instead eat insect-derived protein ... Is that ok?

- Corporate insecthood. In short: people treat almost everything as people, at least a bit, including companies.

- A special court in Ancient Greece for holding accountable lifeless things.

|

|

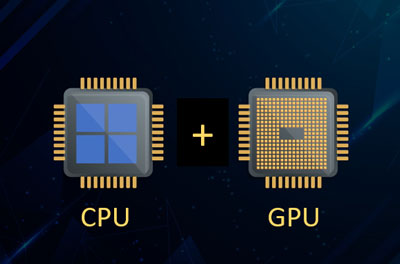

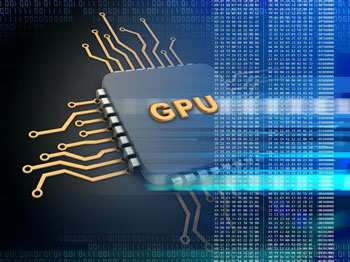

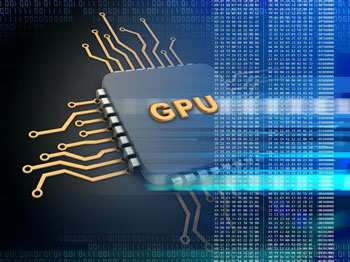

Hi all, this is the first of a series of posts about CUDA and GPU acceleration. (Next post here)

For some time I've been aware of GPU acceleration, and NVidia, and CUDA, but it was a bit of a black box. Recently I've been working on a cool project which has enabled me to double-click on this to understand what's inside the box.

Maybe it would be good to start with an introduction: what is a GPU, why GPU acceleration, who are NVidia, and what is CUDA.

What is a GPU

GPU is an acronym for Graphics Processing Unit. The diagram at right shows the overall architecture of a modern workstation (aka PC).

There's a CPU, with several "cores" (maybe 10 or so), and a GPU, with many many cores (maybe 100 or so). The cores on a GPU are often called Stream Processors, or SPs, for reasons that will be apparent a bit later.

In the parlance of GPUs, the CPU is referred to as the Host, and the GPU is called the Device*.

* A number of terms in the GPU world are "overloaded"; they mean one thing in general, but a specific different thing in this world. I'll try to call out the specific uses of these terms as we go along.

In addition to the CPU cores, the Host has Main Memory (maybe 16GB or so). This memory is somewhat more complicated than a simple box, but for now we'll treat it as a big blob of storage for data. The Device also has its own Graphics Memory (maybe 16GB or so, maybe more). Again, it's more involved than a box, but to start we'll treat it as such. The Device also has a video interface for connecting one or more monitors. This was the original reason for the existence of GPUs, but, as we'll see, more recently they've been used for other purposes.
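To see the Host/Device split on a real machine, here's a minimal sketch using the CUDA runtime API (`cudaGetDeviceCount`, `cudaGetDeviceProperties`) that asks each Device how many processors and how much Graphics Memory it has. One terminology note: NVidia groups its cores into Streaming Multiprocessors (SMs), each containing many individual cores, and `multiProcessorCount` reports the number of SMs. The `to_gib` helper is just for illustration, and the output will of course depend on your hardware.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Illustrative helper: convert a byte count to GiB for readable output.
double to_gib(size_t bytes) {
    return static_cast<double>(bytes) / (1024.0 * 1024.0 * 1024.0);
}

int main() {
    int count = 0;
    // Ask the CUDA runtime how many Devices (GPUs) this Host can see.
    cudaError_t err = cudaGetDeviceCount(&count);
    if (err != cudaSuccess) {
        std::printf("CUDA error: %s\n", cudaGetErrorString(err));
        return 1;
    }
    for (int dev = 0; dev < count; ++dev) {
        cudaDeviceProp prop;
        cudaGetDeviceProperties(&prop, dev);
        std::printf("Device %d: %s\n", dev, prop.name);
        // Number of Streaming Multiprocessors; each holds many CUDA cores.
        std::printf("  Streaming Multiprocessors: %d\n", prop.multiProcessorCount);
        // How many threads each SM can keep in flight at once.
        std::printf("  Max threads per SM:        %d\n", prop.maxThreadsPerMultiProcessor);
        // The Device's own Graphics Memory, separate from Main Memory.
        std::printf("  Graphics Memory:           %.1f GiB\n", to_gib(prop.totalGlobalMem));
    }
    return 0;
}
```

Multiplying the SM count by the max threads per SM gives a rough idea of how many threads a Device can have running concurrently, which is where the "1,000s of threads" figure comes from.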

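The CPU and GPU (or, as we shall say, Host and Device) communicate over a Bus (PCI Express on current workstations). The Bus is fast (roughly 16-32GB/s for a PCIe x16 slot), but slower than Main Memory (roughly 50GB/s or more) and much slower than Graphics Memory (hundreds of GB/s, up to 1TB/s or more on high-end GPUs).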
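Because every byte moving between Host and Device has to cross the Bus, it's worth measuring. Here's a rough sketch, assuming a CUDA-capable machine: it times a single Host-to-Device `cudaMemcpy` of a 256MiB buffer (an arbitrary size) using CUDA events. Pinned host memory (`cudaMallocHost`) is used because ordinary pageable memory usually transfers more slowly. Error checking is omitted for brevity, and one copy gives a ballpark number, not a benchmark; `gb_per_s` is an illustrative helper.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Illustrative helper: bandwidth in GB/s (10^9 bytes/s) from bytes and milliseconds.
double gb_per_s(size_t bytes, float ms) {
    return (static_cast<double>(bytes) / 1e9) / (ms / 1000.0);
}

int main() {
    const size_t bytes = 256ull << 20;  // 256 MiB test buffer (arbitrary size)
    void* host = nullptr;
    void* device = nullptr;
    cudaMallocHost(&host, bytes);  // pinned (page-locked) Main Memory
    cudaMalloc(&device, bytes);    // Graphics Memory on the Device

    cudaEvent_t start, stop;
    cudaEventCreate(&start);
    cudaEventCreate(&stop);

    // Time one Host -> Device copy across the Bus.
    cudaEventRecord(start);
    cudaMemcpy(device, host, bytes, cudaMemcpyHostToDevice);
    cudaEventRecord(stop);
    cudaEventSynchronize(stop);  // wait until the copy has finished

    float ms = 0.0f;
    cudaEventElapsedTime(&ms, start, stop);
    std::printf("Host -> Device: %.1f GB/s\n", gb_per_s(bytes, ms));

    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    cudaFree(device);
    cudaFreeHost(host);
    return 0;
}
```

Whatever number you get, the practical lesson is the same: keep data on the Device as long as possible, because round trips over the Bus are comparatively expensive.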

Evolution of GPUs

The history goes back to the earliest days of workstations (PCs).

GPUs began as simple graphics adapters. CPUs had one or a small number of Cores and Main Memory, and Graphics Adapters simply added a Video Interface to display things on a monitor. The Video Interface accessed the Main Memory over the Bus.

Dedicated Graphics Memory was added to Graphics Adapters to offload the CPU. This enabled much faster display of graphics, but also left the CPU and Main Memory free to perform tasks in parallel with displaying information on a Monitor.

GPUs added processors for simple operations, like rendering textures, and implementing "sprites" (small regions of images which moved against a background). These processors were simple and slow compared to CPUs, but they sped up the graphics experience and further offloaded CPUs.

GPUs added more processors, enabling more complex video operations to be performed on the GPU instead of the CPU. Unlike CPUs, the emphasis was on performing multiple simple operations in parallel.

At the same time, CPUs began adding more cores too, enabling more parallel processing.

This was partly a reflection of the more complex workloads being performed by workstations - many programs running at the same time - and partly due to computer architecture - it became easier to add more cores than to make individual cores faster.

As the complexity of CPU workloads continued to increase and as GPUs became more capable, they began to be used for non-video processing. They were good for offloading compute tasks which were highly parallel.

Graphics Adapter companies like NVidia began making software toolkits like CUDA to facilitate this use of GPUs.

As the usage of GPUs for non-video tasks increased, GPUs added even more processors.

Current GPUs have 100s of processors which can concurrently process 1,000s of threads, enabling highly parallel compute tasks to be accelerated far beyond what is possible on CPUs.

Why GPUs for computation

GPUs optimized for highly parallel computing were perfect for applications like image and video processing, and AI/ML computation.

This diagram illustrates CPU processing without a GPU. The green arrows represent units of work, with time going from left to right. Multiple CPU cores can process tasks concurrently, up to about 20 in parallel. The CPU threads share Main Memory.
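For comparison, here's what the CPU-only picture looks like in code: a minimal C++ sketch (CUDA source files are C++, so this compiles with `nvcc` too) that splits a hypothetical "scale every element" task across a handful of CPU threads, one slice each, all sharing the same array in Main Memory. `scale_on_cpu` and `scale_slice` are illustrative names, not library functions.

```cuda
#include <algorithm>
#include <cstdio>
#include <functional>
#include <thread>
#include <vector>

// Each CPU thread handles one contiguous slice of the array.
void scale_slice(std::vector<float>& data, size_t begin, size_t end, float factor) {
    for (size_t i = begin; i < end; ++i) data[i] *= factor;
}

// Split the work across a few CPU threads (roughly one per core).
void scale_on_cpu(std::vector<float>& data, float factor, unsigned num_threads) {
    if (num_threads == 0) num_threads = 1;
    std::vector<std::thread> workers;
    size_t chunk = (data.size() + num_threads - 1) / num_threads;
    for (unsigned t = 0; t < num_threads; ++t) {
        size_t begin = t * chunk;
        size_t end = std::min(data.size(), begin + chunk);
        if (begin >= end) break;  // fewer elements than threads
        // All threads share one vector in Main Memory; their slices don't overlap.
        workers.emplace_back(scale_slice, std::ref(data), begin, end, factor);
    }
    for (auto& w : workers) w.join();  // wait for every thread to finish
}

int main() {
    std::vector<float> data(1 << 20, 1.0f);
    unsigned cores = std::thread::hardware_concurrency();  // may be 0 if unknown
    scale_on_cpu(data, 2.0f, cores);
    std::printf("data[0] = %.1f using %u threads\n", data[0], cores == 0 ? 1 : cores);
    return 0;
}
```

The number of threads here is limited by the number of CPU cores, which is exactly the ceiling the GPU removes.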

This diagram shows CPU processing with a GPU. The blue lines show the CPU spawning multiple GPU threads - the yellow arrows - which run in parallel to the CPU threads. Hundreds and indeed thousands and tens of thousands of parallel threads can run in this way. The GPU threads share Graphics Memory, separate from the Main Memory used by the CPU.

The process of starting GPU processing is called "invoking a kernel", where "kernel" is used to refer to the software program running on the GPU (the Device).
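Here's a minimal sketch of invoking a kernel, doing the same hypothetical "scale every element" task on the Device. The `__global__` function is the kernel; the `<<<blocks, threads>>>` syntax launches it on roughly a million GPU threads, grouped into blocks of 256 (a common but arbitrary choice). Note the round trip: copy data across the Bus into Graphics Memory, compute, copy back. `scale_kernel` and `blocks_needed` are illustrative names, and error checking is omitted.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// The kernel: runs on the Device, once per GPU thread.
__global__ void scale_kernel(float* data, size_t n, float factor) {
    // Each thread computes its own global index from its block and thread IDs.
    size_t i = static_cast<size_t>(blockIdx.x) * blockDim.x + threadIdx.x;
    if (i < n) data[i] *= factor;  // the last block may have spare threads
}

// Number of blocks needed so that blocks * threads_per_block >= n.
unsigned blocks_needed(size_t n, unsigned threads_per_block) {
    return static_cast<unsigned>((n + threads_per_block - 1) / threads_per_block);
}

int main() {
    const size_t n = 1 << 20;
    std::vector<float> host(n, 1.0f);  // input data in Main Memory

    float* device = nullptr;
    cudaMalloc(&device, n * sizeof(float));  // allocate Graphics Memory
    // Copy input across the Bus, Host -> Device.
    cudaMemcpy(device, host.data(), n * sizeof(float), cudaMemcpyHostToDevice);

    // Invoke the kernel: ~1M threads in blocks of 256.
    const unsigned threads = 256;
    scale_kernel<<<blocks_needed(n, threads), threads>>>(device, n, 2.0f);
    cudaDeviceSynchronize();  // wait for the Device to finish

    // Copy results back across the Bus, Device -> Host.
    cudaMemcpy(host.data(), device, n * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(device);

    std::printf("host[0] = %.1f, host[n-1] = %.1f\n", host[0], host[n - 1]);
    return 0;
}
```

Unlike the CPU version, there's no loop over slices: each of the million threads handles exactly one element, and the GPU hardware schedules them across its SMs.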

NVidia and CUDA and OpenCL

NVidia was founded in 1993, making Graphics Adapters and chips. In 1999 they shipped their first "GPU", popularizing the term, and heralding the evolution to come. Around 2003, a Stanford research project called "Brook" showed a way for applications to use GPUs for parallel computing. Then in 2006 NVidia introduced the first version of CUDA, a whole software environment for developing applications which used GPUs. The initial application for GPUs was of course gaming, which continues to be an important use case today, but CUDA enabled many other uses as well, including, importantly, acceleration of the execution of neural networks, which have revolutionized AI and ML applications.

In 2008 Apple proposed OpenCL, an "open" approach to GPU computing closely patterned on CUDA (which was and remains NVidia-only), and developed it through the Khronos Group, an industry consortium whose members include other GPU manufacturers like AMD and Intel, as well as NVidia itself. OpenCL first shipped in Apple's Mac OS X Snow Leopard in 2009.

Today you can write applications with CUDA for NVidia GPUs [only], or you can write applications with OpenCL which will run on virtually any GPU [including NVidia]. But CUDA is optimized for NVidia, and NVidia remains the leading GPU vendor. If you've developed an application in CUDA, it isn't too difficult to migrate it to OpenCL, because the two are architecturally similar.
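To illustrate that similarity, here's a sketch of a simple vector-add kernel in CUDA, with the corresponding OpenCL C spelling of each construct in the comments. The kernel bodies map almost one to one; the bigger differences are on the Host side. One detail that trips people up: OpenCL's global work size counts total threads (work-items), while a CUDA launch specifies blocks and threads per block, so the hypothetical helper `opencl_global_size` just multiplies them. No GPU work is done in `main`; it only prints the equivalent launch sizes.

```cuda
#include <cstddef>
#include <cstdio>
#include <cuda_runtime.h>

// CUDA vector add; comments show the OpenCL C equivalent of each construct.
__global__                       // OpenCL: __kernel
void vector_add(const float* a,  // OpenCL: __global const float* a
                const float* b,  // OpenCL: __global const float* b
                float* c,        // OpenCL: __global float* c
                int n) {
    // blockIdx.x  ~ get_group_id(0)
    // blockDim.x  ~ get_local_size(0)
    // threadIdx.x ~ get_local_id(0)
    // so the whole expression below ~ get_global_id(0)
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Launch-size mapping (hypothetical helper):
//   CUDA:   vector_add<<<blocks, threads>>>(a, b, c, n);
//   OpenCL: clSetKernelArg(...) for each argument, then
//           clEnqueueNDRangeKernel(queue, kernel, 1, NULL,
//                                  &global_size, &local_size, 0, NULL, NULL);
//   where local_size == threads and global_size == blocks * threads.
size_t opencl_global_size(unsigned blocks, unsigned threads_per_block) {
    return static_cast<size_t>(blocks) * threads_per_block;
}

int main() {
    unsigned blocks = 4096, threads = 256;
    std::printf("CUDA <<<%u, %u>>> == OpenCL global=%zu, local=%u\n",
                blocks, threads, opencl_global_size(blocks, threads), threads);
    return 0;
}
```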

What is CUDA and how do you use it?

CUDA is an environment with four main pieces:

-